In October 2021, IDEA presented its first demonstration of 3D streaming using the Immersive Technologies Media Format (ITMF). In that demo we showed that it is possible to take an ITMF file, extract the scene description and assets, and stream them to a client from a network-based server. That demo can be viewed here.

The October demo showcased an early prototype of the 3D streaming platform, focusing on a small number of 3D objects streamed to a single client with an attached display. Since then, engineers from IDEA members Charter Communications, OTOY, and CableLabs have worked together to improve the architecture and the pipeline for streaming 3D, and have now prepared a demonstration that takes 3D streaming to a new level.

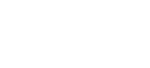

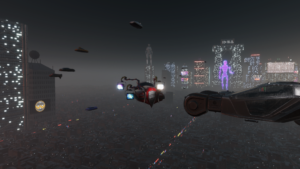

The new demo, which takes place May 4th, 2022, will involve the streaming of hundreds of 3D objects in a complete 3D scene, creating a seamless 3D experience for the viewer. The viewer will now be able to watch and interact with longer-form 3D content on multiple immersive displays.

For this demo, we will employ both the Unreal and Unity game engines as clients. More than 90% of developers and content providers building for immersive displays use these game engines, so this is a major step towards bringing 3D streaming to a wider audience. The use of Unreal and Unity runtimes paves the way for targeting current and future light-field-based holographic displays such as the recently announced SolidLight display from IDEA founding member Light Field Lab.

ITMF scenes, in this case designed using OTOY’s OctaneRender, are decoded using IDEA’s open source library, libitmf, and delivered over the network during the demo.

This method is unique in that our pipeline will demonstrate the possibility of authoring in popular 3D applications like Blender, OctaneRender, Cinema 4D, Autodesk 3ds Max, and Maya, and then translating the content to target Unreal or Unity runtime clients, streamed across a network.

To enable this functionality, our client detects the GPU and CPU of the device and provides this information to the “network orchestrator,” which is our tool for sending the correct asset stream based on the hardware capabilities of the device.
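
As a rough illustration of the idea, the selection step might look something like the Python sketch below. It is not IDEA's implementation: the `DeviceProfile` and `AssetStream` types, the tier names, the thresholds, and the choice of GPU memory and CPU core count as the capability signals are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceProfile:
    """Hypothetical capability report sent by the client."""
    gpu_memory_gb: float
    cpu_cores: int


@dataclass(frozen=True)
class AssetStream:
    """Hypothetical asset stream variant with minimum hardware requirements."""
    name: str
    min_gpu_memory_gb: float
    min_cpu_cores: int


# Illustrative tiers, ordered from most to least demanding.
# The thresholds are invented for this sketch.
STREAMS = [
    AssetStream("high", min_gpu_memory_gb=8.0, min_cpu_cores=8),
    AssetStream("medium", min_gpu_memory_gb=4.0, min_cpu_cores=4),
    AssetStream("low", min_gpu_memory_gb=0.0, min_cpu_cores=1),
]


def select_stream(profile: DeviceProfile, streams=STREAMS) -> AssetStream:
    """Return the most demanding stream whose requirements the device meets."""
    for stream in streams:
        if (profile.gpu_memory_gb >= stream.min_gpu_memory_gb
                and profile.cpu_cores >= stream.min_cpu_cores):
            return stream
    # Fall back to the least demanding stream if nothing matches.
    return streams[-1]


if __name__ == "__main__":
    headset = DeviceProfile(gpu_memory_gb=4.0, cpu_cores=8)
    print(select_stream(headset).name)
```

In this sketch a device must satisfy both the GPU and the CPU requirement of a tier, so a device with a strong GPU but few CPU cores falls through to a lower tier. A real orchestrator would likely weigh more signals, such as display type and available bandwidth.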

This demonstration will show that there is a real pipeline for developers and content providers to stream 3D content to immersive displays using the ITMF format. As GPUs continue to increase in capability across the industry on mobile and desktop devices, streaming 3D content is now a reality.

In many cases, streaming 3D offers benefits over more traditional pixel-based streaming methods, including giving the 3D artist or developer more granular control of content based on device type and GPU rendering ability. For example, instead of using real-world cameras to capture and transmit information, we can use virtual cameras, which can provide either dynamic camera positions or hundreds of virtual camera positions.

Repurposing 3D objects across multiple scenes is another example. Leveraging the power of GPUs to manipulate 3D content locally benefits both the user and the developer. This method also lowers input latency and bitrate and allows assets to be reused, among several other advantages that will be demonstrated on May 4th.

Imagine being able to create content in a popular 3D application, export the project, and then target multiple devices for lossless streaming across a network, with each device receiving the optimal content for its GPU.

To see the future of 3D interoperability, and streaming 3D from existing CDNs and future network delivery technologies via Edge, 5G and 10G, we invite you to attend the demonstration from IDEA on May 4th.

Posted March 29, 2022